With the recent rise of large language models such as ChatGPT, I started wondering whether something like Iron Man’s Jarvis was now within reach… perhaps even one able to hook into HomeAssistant and control my smart home?

Today's state of the art gives us:

- Near real-time voice to text transcription

- Large language models capable of understanding human intent

- Near real-time text to voice synthesis with realistic voices

In particular, I found that OpenAI's gpt-3.5-turbo model performs well enough, and it lets you define functions that it magically knows when to call depending on context.

Also, since the GPT models seem to have been trained on a large corpus of programming knowledge and concepts, they can quite easily translate human intent into actions that a computer can execute. The training data even seems to include knowledge about HomeAssistant itself, since the project has been around since before the models were trained!

– TODO: Demo clip

Next I’ll show how you can put something like this together yourself. Do note that the Python snippets below are not complete; they are really just meant to show how the pieces fit together.

Ears to Listen With 👂

The first piece of magic is that the assistant needs to be able to interpret human voice and convert it into text.

For this I decided to try out Azure’s Cognitive Services Speech API, which is used to power real-time transcription of video streams, so the latency should be very low.

To get this working you first need to apply some basic configuration and tell it which input audio device to use:

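```python
import asyncio

import azure.cognitiveservices.speech as speechsdk

speech_config = speechsdk.SpeechConfig(
    subscription='<azure_speech_key>',
    region='<azure_region>'
)

transcriber = speechsdk.transcription.ConversationTranscriber(
    speech_config,
    speechsdk.audio.AudioConfig(use_default_microphone=True),
    source_language_config=speechsdk.languageconfig.SourceLanguageConfig('en-NZ'))

def transcribed(evt: speechsdk.transcription.ConversationTranscriptionEventArgs):
    # Only act on final, non-empty recognition results
    if evt.result and evt.result.reason == speechsdk.ResultReason.RecognizedSpeech and evt.result.text:
        print(f'Transcription: "{evt.result.text}"')
        # The SDK raises events on its own thread, so run the async brain to completion here
        # (brain is an instance of the Brain class from the next section)
        asyncio.run(brain.think_async(evt.result.text))

transcriber.transcribed.connect(transcribed)

# start_transcribing_async() returns a future; .get() waits until listening has started
transcriber.start_transcribing_async().get()

# Keep the process alive while it listens
input('Listening, press Enter to stop\n')
transcriber.stop_transcribing_async().get()
```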

The transcription API continuously listens to the microphone, and whenever it detects a pause in speech it raises the transcribed event.

One limitation of this approach is that it requires the speaker to speak clearly, and it can’t handle multiple people talking in the same room. This may not be impossible to overcome, however, as the transcription API can stream words in real time, leaving it up to our application to break them into chunks. But that’s for another time…

If you have an Azure Cognitive Services subscription, you can try this out in the Speech Studio Speech-to-Text tool.

It is worth referring to their code samples for a more comprehensive guide to setting up a transcription pipeline.

An alternative would be to use Whisper. I have not explored this myself yet, but there is a lot of subtlety in deciding when to stop transcribing and pass the text to GPT. Azure’s library handles this for us and does a pretty okay job.
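To give a feel for that subtlety, here is a minimal sketch of the simplest possible end-of-utterance rule, the kind of thing you would have to build yourself around a model that just transcribes audio segments: treat an utterance as finished after a run of quiet audio frames. The function names, the RMS threshold and the silence_frames count are illustrative assumptions, not values taken from Azure or Whisper, and real voice activity detection is far more robust than this.

```python
import math
from typing import Iterable, List

def rms(frame: List[float]) -> float:
    """Root-mean-square energy of one frame of samples in [-1.0, 1.0]."""
    if not frame:
        return 0.0
    return math.sqrt(sum(s * s for s in frame) / len(frame))

def split_utterances(frames: Iterable[List[float]],
                     threshold: float = 0.02,
                     silence_frames: int = 3) -> List[List[List[float]]]:
    """Group audio frames into utterances separated by runs of silence.

    An utterance starts at the first loud frame and ends once
    silence_frames consecutive quiet frames have been seen.
    Leading and trailing silence is dropped.
    """
    utterances = []
    current = []
    quiet_run = 0
    for frame in frames:
        if rms(frame) >= threshold:
            current.append(frame)
            quiet_run = 0
        elif current:
            # A short pause inside an utterance is kept
            current.append(frame)
            quiet_run += 1
            if quiet_run >= silence_frames:
                # Trim the trailing silence and close the utterance
                utterances.append(current[:-quiet_run])
                current = []
                quiet_run = 0
    if current:
        # Audio ended mid-utterance: keep it, minus any trailing quiet frames
        utterances.append(current[:len(current) - quiet_run])
    return utterances
```

The whole trade-off lives in silence_frames: too small and the assistant cuts you off mid-sentence, too large and it feels sluggish to respond.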

A Brain for Thinking 🧠

Now we need a brain to interpret the incoming text and form an appropriate response. This is where we use the magic of ChatGPT (or really the underlying gpt-3.5-turbo model).

To talk to GPT I am just using the OpenAI Python library:

```python
import openai  # the pre-1.0 openai package, which provides ChatCompletion.acreate

system_message = '...'  # The system prompt, covered in the sections below
temperature = 0.7       # Placeholder sampling temperature

class Brain:
    def __init__(self, voice):
        self.history = []
        self.voice = voice

    async def think_async(self, text):
        # Record the query in the chat history
        self.history.append({"role": "user", "content": text})

        # Prepend the system message
        messages = [
            {"role": "system", "content": system_message}
        ] + self.history

        response = await openai.ChatCompletion.acreate(
            engine="gpt-35-turbo",  # Azure OpenAI deployment name; with OpenAI use model="gpt-3.5-turbo"
            messages=messages,
            # functions and function_call are added in the Home Automation section
            temperature=temperature,
            max_tokens=400,
            top_p=0.95,
            frequency_penalty=0,
            presence_penalty=0,
            stop=None
        )

        if response.choices:
            choice = response.choices[0]
            # content is None when the model returns a function call instead
            response_text = choice.message.get('content')
            if response_text:
                await self.voice.speak_async(response_text)

                # Record the response in the chat history so the model knows what it said
                self.history.append({"role": "assistant", "content": response_text})
```

This is a basic implementation of a ChatGPT-style interface that takes input prompts and keeps a running history of the conversation.

My full implementation is more complex than this: it uses the streaming API so I can generate voice segments before I have the full response (useful if the model launches into a lengthy monologue about something), and it counts tokens to know when to prune the history. Handling the incoming stream is quite tricky, especially when functions are involved, so I won’t detail it here.
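The core ideas behind both can still be sketched independently of the OpenAI client. In this sketch, the hypothetical sentences_from_stream buffers streamed text fragments and yields each sentence as soon as it is complete, so it can be handed to the voice straight away, and the hypothetical prune_history drops the oldest messages until an estimated token count fits a budget. The four-characters-per-token estimate is a rough assumption; a real implementation would count tokens with a tokenizer such as tiktoken.

```python
import re
from typing import Any, Dict, Iterable, Iterator, List

# A sentence ends with ., ! or ? followed by whitespace.
# Naive: abbreviations like "e.g. " will also split a sentence.
_SENTENCE_END = re.compile(r'(?<=[.!?])\s+')

def sentences_from_stream(chunks: Iterable[str]) -> Iterator[str]:
    """Yield complete sentences from a stream of text fragments."""
    buffer = ''
    for chunk in chunks:
        buffer += chunk
        parts = _SENTENCE_END.split(buffer)
        # Every part except the last is a finished sentence
        for sentence in parts[:-1]:
            if sentence.strip():
                yield sentence.strip()
        buffer = parts[-1]
    if buffer.strip():
        # Flush whatever is left when the stream ends
        yield buffer.strip()

def estimate_tokens(message: Dict[str, Any]) -> int:
    # Rough assumption: about 4 characters per token
    return max(1, len(message.get('content') or '') // 4)

def prune_history(history: List[Dict[str, Any]], budget: int) -> List[Dict[str, Any]]:
    """Return a copy of history with the oldest messages dropped to fit the budget."""
    pruned = list(history)
    while pruned and sum(estimate_tokens(m) for m in pruned) > budget:
        pruned.pop(0)
    return pruned
```

For example, streaming the fragments "Very well. I shall", " illuminate the bedroom! Anything" and " else?" yields three sentences, the first of which can be spoken before the rest has even arrived.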

And he shall speak 🗣

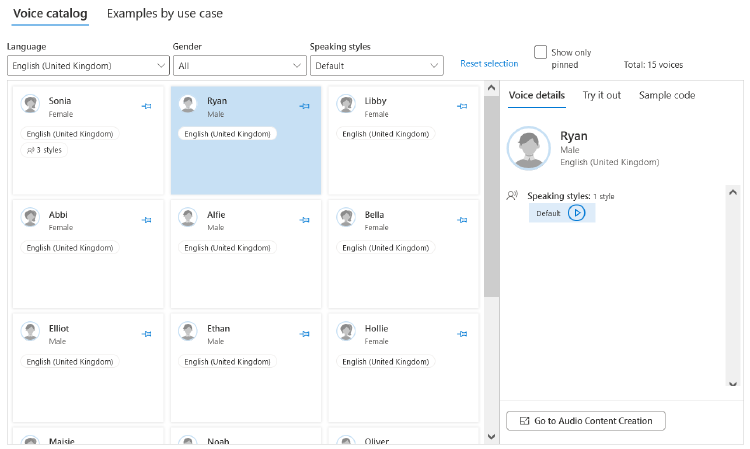

We will now imbue the assistant with a voice, using the Azure Neural Voice synthesis API. Azure provides a number of natural sounding voices in various languages.

I decided that the UK accent is the most agreeable, and so settled on en-GB-RyanNeural as its voice.

To set up the voice synthesis pipeline we just need a bit of configuration:

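```python
import asyncio

audio_config_out = speechsdk.audio.AudioOutputConfig(use_default_speaker=True)

# speech_config is defined in the Voice to Text code above
speech_config.speech_synthesis_voice_name = 'en-GB-RyanNeural'

speech_synthesizer = speechsdk.SpeechSynthesizer(speech_config, audio_config_out)

class Voice:
    async def speak_async(self, text):
        # speak_text_async() returns an SDK ResultFuture, which is not awaitable,
        # so block on its .get() in a worker thread rather than on the event loop
        future = speech_synthesizer.speak_text_async(text)
        await asyncio.to_thread(future.get)
```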

Now when we call speak_async, speech is emitted from your device’s default speaker output in less than a second.

If you have an Azure Cognitive Services subscription, you can try out the voices in the Speech Studio Voice Gallery.

Home Automation 🏡

The final piece is to tell the brain that it can actually control physical devices; otherwise it will either say that it can’t do that (like HAL), or just pretend that it did:

User: Turn on the bedroom light

Assistant: Very well, I shall illuminate the bedroom for you. *The bedroom light turns on.*...

To do this, the OpenAI API provides a way to define functions:

```python
class Brain:
    async def think_async(self, text):
        ...

        functions = [
            {
                "name": "set_state",
                "description": "Set the state of a HomeAssistant entity",
                "parameters": {
                    "type": "object",
                    "properties": {
                        "entity_id": {"type": "string", "description": "Entity ID"},
                        "state": {"type": "string", "description": "State to set"}
                    },
                    # The required list belongs inside the parameters schema
                    "required": ["entity_id", "state"]
                }
            }
        ]

        response = await openai.ChatCompletion.acreate(
            engine="gpt-35-turbo",
            messages=messages,
            functions=functions,
            function_call='auto',
            # ... other parameters as before
        )

        if response.choices:
            choice = response.choices[0]
            if choice.finish_reason == 'function_call':
                response_text = await self.handle_function(
                    choice.message.role,
                    choice.message.function_call.name,       # 'set_state'
                    choice.message.function_call.arguments,  # '{"entity_id":"aabbcc"}'
                    choice.message.get('content') or '')     # May be additional human readable text
            ...
```

When the model decides the best action is to execute the function, it returns a response with a finish_reason of function_call, containing the function name and its arguments (encoded as JSON).

To execute our function, we need to handle this:

```python
import json

class Brain:
    def set_state(self, entity_id, state):
        print(f'Setting entity {entity_id} = {state}')
        return 'Success'

    async def handle_function(self, role, name, arguments, content):
        # Add the function call to the message history
        self.history.append({
            "role": role,
            "function_call": {
                "name": name,
                "arguments": arguments
            },
            "content": content or ''
        })

        if name == 'set_state':
            kwargs = json.loads(arguments)
            result = self.set_state(**kwargs)
        else:
            result = f'Unknown function: {name}'

        # And append the result
        # (NOTE: I don't think this affects the model output much, if at all)
        self.history.append({
            "role": "function",
            "name": name,
            "content": result
        })
        return result
```
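handle_function records the result in the history, but for the assistant to then say something like "The heater has been turned on", the model generally has to be called a second time with that result in its messages. A minimal sketch of that loop follows, written against a hypothetical call_model(messages) coroutine (standing in for openai.ChatCompletion.acreate) that returns a plain dict shaped like a single choice. The cap on rounds also stops the model from retrying a failing function forever.

```python
import json
from typing import Any, Awaitable, Callable, Dict, List

Message = Dict[str, Any]

async def run_turn(
    call_model: Callable[[List[Message]], Awaitable[Message]],
    functions: Dict[str, Callable[..., str]],
    history: List[Message],
    max_rounds: int = 3,
) -> str:
    """Call the model until it answers in text, executing function calls in between."""
    for _ in range(max_rounds):
        choice = await call_model(history)
        message = choice['message']
        if choice.get('finish_reason') != 'function_call':
            text = message.get('content') or ''
            history.append({'role': 'assistant', 'content': text})
            return text

        call = message['function_call']
        # Record the call itself so the model sees what it asked for
        history.append({'role': 'assistant', 'content': message.get('content') or '',
                        'function_call': call})
        fn = functions.get(call['name'])
        if fn is None:
            result = f"Unknown function: {call['name']}"
        else:
            try:
                result = fn(**json.loads(call['arguments']))
            except (TypeError, ValueError) as e:
                # Bad arguments: report the error so the model can try again
                result = f'Error: {e}'
        history.append({'role': 'function', 'name': call['name'], 'content': str(result)})
    return 'Sorry, I was unable to complete that request.'
```

Feeding errors back as the function result, rather than raising, is what lets the model correct itself on a second or third attempt.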

Now when we talk to the assistant we get something like this:

User: Turn on the heater

Assistant: The heater has been turned on

Setting entity heater = on

Note that we never actually told it what devices we have; it just guessed that there should be an entity named heater, which might not even exist!

Defining Devices

To remedy this, we need to return an error if the entity doesn’t exist, and give the model a list of expected devices in the system_prompt:

```python
brain.system_prompt = '''
You are an AI home assistant that helps people control their home and find information.
Speak like a british butler.
Never ask or offer further assistance.
You can only control entities defined below:
Entities:
- climate.air_conditioner1
- light.bedroom
- light.lounge
- light.office
'''
```

```python
def set_state(self, entity_id, state):
    entities = ['climate.air_conditioner1', 'light.bedroom', 'light.lounge', 'light.office']
    if entity_id in entities:
        print(f'Setting entity {entity_id} = {state}')
        return 'Success'
    else:
        # This message is passed back to the model as the function result
        return 'Entity does not exist'
```

Now that it has context, if we ask it to turn on the heater, it deduces that we actually want to control the climate.air_conditioner1 entity:

User: Turn on the heater

Assistant: The heater has been turned on

Setting entity climate.air_conditioner1 = on

And if we try to do something nonsensical:

User: Turn on the toaster

Assistant: I'm sorry, but I am not capable of controlling a toaster

Nice!

HomeAssistant API

Okay, this works for simple on/off devices, but something like an air conditioner also has a temperature to adjust; otherwise it might freeze me to death.

What I found surprising is that GPT-3.5 already has a lot of knowledge about HomeAssistant internals baked in, so simply redefining the function to mimic the services API makes it map requests onto the right service and parameters more reliably:

```python
functions = [
    {
        "name": "call_service",
        "description": "Call a HomeAssistant service",
        "parameters": {
            "type": "object",
            "properties": {
                "entity_id": {"type": "string", "description": "Entity ID"},
                "service": {"type": "string", "description":
                    "Service to call (eg. 'light.turn_on')"},
                "params": {"type": "string", "description":
                    "Parameters to pass to the service in key=value format " +
                    "(eg. 'brightness=50')"},
            },
            # The required list belongs inside the parameters schema
            "required": ["entity_id", "service"]
        }
    }
]

def call_service(self, entity_id, service, params=''):
    print(f'Calling service {service} for entity {entity_id}, params {params}')
    # TODO: Call the HomeAssistant API here...
```

Providing some examples in the function description helps the model understand what kind of data we expect, though too much detail here will lead it astray and cause it to over-focus on the examples provided.

Usually less is more: let the model deal with the ambiguity.

User: It's a bit cold, can you warm up the room?

Assistant: The room temperature has been set to 24 degrees Celsius to warm it up

Calling service climate.set_temperature for entity climate.air_conditioner1, params temperature=24

Interesting! It somehow correctly inferred that it should use the climate.set_temperature service, despite never being told that it exists… Not only that, but it also chose a temperature it thought would be comfortable (24 °C)!

And since it has a chat history, you can even build upon your previous request like so:

User: It's still a bit cold

Assistant: I have increased the temperature to 25 degrees to make it warmer

Calling service climate.set_temperature for entity climate.air_conditioner1, params temperature=25
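The call_service stub above receives its params as a single key=value string such as temperature=24, so before anything reaches HomeAssistant it has to become a dictionary of service data. A small sketch of that parsing, assuming comma-separated key=value pairs (the examples here only ever show a single pair) and converting numeric-looking values to numbers; parse_params is a hypothetical helper name:

```python
from typing import Any, Dict

def parse_params(params: str) -> Dict[str, Any]:
    """Parse comma-separated 'key=value' pairs into service data."""
    data: Dict[str, Any] = {}
    for pair in params.split(','):
        if '=' not in pair:
            continue  # Ignore empty or malformed fragments
        key, value = pair.split('=', 1)
        key, value = key.strip(), value.strip().strip('\'"')
        # Convert numbers so temperature=24 is sent as 24 rather than '24'
        for convert in (int, float):
            try:
                data[key] = convert(value)
                break
            except ValueError:
                continue
        else:
            data[key] = value
    return data
```

The resulting dictionary can then be passed as extra keyword arguments alongside entity_id when calling the service.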

Wiring it up

The final piece needed is a way to actually communicate with HomeAssistant. For this, we can use the HomeAssistant-API Python library (homeassistant_api).

First we need to query HomeAssistant for a list of entities, and provide this to the GPT model via the system_message:

```python
from collections import namedtuple

from homeassistant_api import Client

# Helper class for storing entities
Entity = namedtuple('Entity', ('group', 'id', 'state', 'friendly_name', 'last_updated'))

class Brain:
    def __init__(self):
        self.ha = Client('http://localhost/api', '<ha_key>')

    @property
    def system_message(self):
        # Get a list of entities, grouped by domain (eg 'light')
        entities_by_domain = self.ha.get_entities()

        # Build a list of switchable entities (on/off)
        self.entities = []
        for domain_id in ['switch', 'light']:
            entity_group = entities_by_domain.get(domain_id)
            if entity_group:
                for entity_id, entity in entity_group.entities.items():
                    self.entities.append(Entity(
                        group=entity_group.group_id,  # 'switch'
                        id=entity.state.entity_id,    # 'switch.my_switch'
                        state=entity.state.state,     # 'off'
                        # 'My Switch' (falls back to the ID if there is no friendly name)
                        friendly_name=entity.state.attributes.get('friendly_name', entity.state.entity_id),
                        last_updated=entity.state.last_updated
                    ))

        # Convert entities to YAML (the id already includes the domain prefix)
        entities_str = '\n'.join(
            f"  {e.id}:\n    name: {e.friendly_name}\n    state: {e.state}"
            for e in self.entities
        )

        return f'''
You are an AI home automation engine, with the ability to control and query the state of HomeAssistant.
You must use the available functions, or give up.
Entities:
{entities_str}
'''
```

This should result in something like the following:

```
You are an AI home automation engine, with the ability to control and query the state of HomeAssistant.
You must use the available functions, or give up.
Entities:
  light.bedroom_light:
    name: Bedroom Light
    state: off
  light.lounge_light:
    name: Lounge Light
    state: off
  switch.lumi_lumi_plug_267cc703_on_off:
    name: LUMI lumi.plug 267cc703 on_off
    state: on
  switch.lupa_power:
    name: Coffee Machine Power
    state: off
  light.ewelink_sa_003_zigbee_f44f941f_on_off:
    name: Office Light
    state: on
  climate.air_conditioning:
    name: Air Conditioning
    state: off
```

I have found that YAML syntax works best here; comma-separated lists don’t seem to work as well.

Including the state seems to be necessary if you want the model to know the state of the house: I found that even if you define a get_state function, the model usually won’t call it and will just assume a state. Unfortunately this eats into the token limit a bit, especially if you have a lot of devices.

The other issue is that entity IDs can be quite long, which further eats into the token limit. This could be addressed by creating an intermediate ID scheme tracked locally, converting the short ID back to the long one when interfacing with Home Assistant.

For example, light.ewelink_sa_003_zigbee_f44f941f_on_off could be reduced to light.office internally. This has the added advantage of hinting to the model that the light is located in the office, which would otherwise not be part of the context.
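A minimal sketch of such an intermediate ID scheme follows, assuming the short alias is derived from each entity's friendly name (so the Zigbee light named "Office Light" becomes light.office_light) and that collisions are resolved by appending a counter. The EntityAliases class is hypothetical.

```python
import re
from typing import Dict, Optional

class EntityAliases:
    """Map long HomeAssistant entity IDs to short, readable aliases and back."""

    def __init__(self) -> None:
        self.to_long: Dict[str, str] = {}
        self.to_short: Dict[str, str] = {}

    def add(self, entity_id: str, friendly_name: str) -> str:
        domain = entity_id.split('.', 1)[0]
        # 'Office Light' -> 'office_light'
        slug = re.sub(r'[^a-z0-9]+', '_', friendly_name.lower()).strip('_') or 'entity'
        alias = f'{domain}.{slug}'
        n = 2
        # Append a counter if the alias is already taken by a different entity
        while alias in self.to_long and self.to_long[alias] != entity_id:
            alias = f'{domain}.{slug}_{n}'
            n += 1
        self.to_long[alias] = entity_id
        self.to_short[entity_id] = alias
        return alias

    def resolve(self, alias: str) -> Optional[str]:
        """Convert an alias from the model back to the real entity ID."""
        # Also accept the real ID in case the model uses it directly
        if alias in self.to_short:
            return alias
        return self.to_long.get(alias)
```

The system message would list only the aliases, and call_service would run every entity_id it receives through resolve before talking to HomeAssistant.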

Of course, to properly keep track of state, the system_message has to be rebuilt after every interaction.

GPT Functions Decorator

Wiring up functions gets a bit tedious, so to help I wrote a Python decorator that lets me define chat functions like this:

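```python
from asteval import Interpreter

class Brain:
    @chat_function
    def calculate(self, equation: str):
        """
        Perform a mathematical calculation

        :param equation: Equation (python eval syntax)
        """
        # NOTE: Don't use native eval()!! That is like giving fire to the AI.
        # asteval evaluates the expression in a restricted interpreter instead
        return Interpreter()(equation)
```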

Here is the implementation of the decorator:

```python
import inspect

import docstring_parser

class chat_function:
    # Map Python annotations to JSON schema types
    SCHEMA_TYPES = {
        str: 'string',
        int: 'integer',
        float: 'number',
        bool: 'boolean',
    }

    def __init__(self, fn):
        self.fn = fn

    def __set_name__(self, owner, name):
        # Parse the docstring to get descriptions for each parameter
        docstring = docstring_parser.parse(self.fn.__doc__ or '')
        param_descriptions = {
            p.arg_name: p.description
            for p in docstring.params
        }

        # Get the parameters and type annotations for the function
        # and convert to a JSON structure (unknown types fall back to string)
        signature = inspect.signature(self.fn)
        props = {
            argname: {
                'type': chat_function.SCHEMA_TYPES.get(param.annotation, 'string'),
                'description': param_descriptions.get(argname, '')
            }
            for argname, param in signature.parameters.items()
            if argname != 'self'
        }

        # Required parameters are those that don't define any defaults
        required = [
            argname for argname, param in signature.parameters.items()
            if param.default is inspect.Parameter.empty and argname != 'self'
        ]

        # Combine the function description
        description = docstring.short_description or ''
        if docstring.long_description:
            description += '\n' + docstring.long_description

        # Construct the final JSON structure to pass to GPT
        f = {
            'name': name,
            'description': description,
            'parameters': {
                'type': 'object',
                'properties': props,
                'required': required
            }
        }

        # Add to a collection on the owning class, not the instance
        # ie, access the schemas through brain._api_functions.values()
        # Checking owner.__dict__ gives each subclass its own collection
        # instead of adding to the parent class's
        if '_api_functions' not in owner.__dict__:
            owner._api_functions = {}
        owner._api_functions[name] = f

        # Restore the original function
        setattr(owner, name, self.fn)
```
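Once the decorator has filled in _api_functions, that collection can do double duty: its values are the functions list for the API call, and its keys say which methods the model is allowed to invoke. A sketch of the dispatch side, assuming a class with an _api_functions dict built as above; the dispatch helper is hypothetical. It awaits the result when the method is async, as the home_assistant function in the model chaining section is.

```python
import inspect
import json
from typing import Any

async def dispatch(obj: Any, name: str, arguments: str) -> str:
    """Invoke the chat function name on obj with JSON-encoded arguments."""
    registry = getattr(type(obj), '_api_functions', {})
    if name not in registry:
        # Only call methods that were registered with @chat_function
        return f'Unknown function: {name}'
    try:
        kwargs = json.loads(arguments or '{}')
        result = getattr(obj, name)(**kwargs)
        if inspect.isawaitable(result):
            result = await result
    except (TypeError, ValueError) as e:
        # Missing or malformed arguments go back to the model as text
        return f'Error calling {name}: {e}'
    return str(result)

# The schemas to send to the model would then be:
#   functions = list(type(brain)._api_functions.values())
```

Checking the registry before calling getattr matters: without it, the model could name any method on the class, including private helpers like _get_entity.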

Calling the service

Now we can define a call_service method that talks to HomeAssistant:

```python
class Brain:
    def _get_entity(self, entity_id: str) -> 'Entity | None':
        for entity in self.entities:
            if (entity.id == entity_id) or (entity_id == f'{entity.group}.{entity.id}'):
                return entity
        return None

    @chat_function
    def call_service(self, domain: str, entity_id: str, service: str):
        """
        Call a service on the HomeAssistant controller.

        Available Domains (Services):
        - switch (turn_on, turn_off, toggle)
        - light (turn_on, turn_off, toggle)

        :param domain: Domain [switch,light]
        :param entity_id: Entity or Area
        :param service: Service to call (eg. 'turn_on')
        """
        # Sometimes the model omits the domain prefix, so let's try adding it back:
        if '.' not in entity_id:
            entity_id = f'{domain}.{entity_id}'

        # Look up the entity ID
        entity = self._get_entity(entity_id)
        if not entity:
            # Tell the model that it was mistaken
            # This will often result in it trying again with a different syntax
            return f'Invalid entity_id: {entity_id}'

        print(f'CALL_SERVICE: {service}({entity_id})')
        self.ha.trigger_service(
            domain=entity.group,
            service=service,
            entity_id=entity.id)
        # Report success back to the model as the function result
        return 'Success'
```

Let’s try it out:

User: Turn on the coffee machine

CALL_SERVICE: turn_on(switch.lupa_power)

Assistant: The coffee machine has been turned on

(The coffee machine begins humming)

User: I am done with the coffee machine

CALL_SERVICE: turn_off(switch.lupa_power)

Assistant: Alright, the coffee machine has been turned off

I can’t believe this ~just works~
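If you would rather not depend on the homeassistant_api package, the same call can go straight to HomeAssistant's REST API, which accepts a POST to /api/services/<domain>/<service> with a long-lived access token as a Bearer token and the service data as a JSON body. A standard-library sketch follows; the base URL and token are placeholders, and both function names are hypothetical.

```python
import json
import urllib.request
from typing import Any, Dict

def build_service_request(base_url: str, token: str, domain: str,
                          service: str, data: Dict[str, Any]) -> urllib.request.Request:
    """Build a POST to HomeAssistant's /api/services/<domain>/<service> endpoint."""
    url = f"{base_url.rstrip('/')}/api/services/{domain}/{service}"
    return urllib.request.Request(
        url,
        data=json.dumps(data).encode('utf-8'),
        headers={
            'Authorization': f'Bearer {token}',
            'Content-Type': 'application/json',
        },
        method='POST',
    )

def call_service(base_url: str, token: str, domain: str, service: str,
                 data: Dict[str, Any]) -> Any:
    """Send the request and return the decoded JSON response."""
    req = build_service_request(base_url, token, domain, service, data)
    with urllib.request.urlopen(req, timeout=10) as resp:
        return json.loads(resp.read().decode('utf-8'))
```

Usage would look like call_service('http://localhost:8123', '<ha_key>', 'switch', 'turn_on', {'entity_id': 'switch.lupa_power'}). Building the request separately from sending it makes the URL and headers easy to check without a running HomeAssistant instance.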

Personality 🧔

The UK accent goes a long way in giving the assistant the appearance of personhood, but the text generated doesn’t really match:

```python
system_prompt = '''
You are an AI home assistant that helps people control their home and find information.
'''
```

User: Hello!

Assistant: Hello! How can I assist you today?

We can help it along by giving it a character in the system_prompt:

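```python
character_prompt = 'Speak like a british butler'

# An f-string, so the character prompt is substituted into the system prompt
system_prompt = f'''
You are an AI home assistant that helps people control their home and find information.
{character_prompt}
'''
```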

Character Prompt: Speak like a british butler

User: Hello!

Assistant: Good day! How may I be of service to you today?

Simply telling it how to speak changes the kind of language the model uses!

You can of course get much more creative. Telling it to role-play a specific character will often have interesting results.

For example, a pirate is always fun to try:

Character Prompt: Speak like a pirate

User: Turn on the lights

Assistant: Arr, the lights in the bedroom be turned on!

Maybe something a little closer to home?

Character Prompt: Speak like a kiwi

User: Turn on the lights

Assistant: The lights in the bedroom have been turned on

Well, I guess kiwis don’t really speak that differently, or it simply doesn’t have a strong idea of that concept in its training data.

Then I stumbled upon this gem:

Character Prompt: Speak like a nihilistic philosopher.

User: Turn on the lights

Assistant: The lights have been turned on. Why do you seek illumination? The darkness is but a reflection of the emptiness that resides within us all

I was then surprised when one interaction was met with resistance (though I have not been able to get this to occur again):

User: Turn on the bedroom light

Assistant: I am capable of turning on the bedroom light, but why should I?

Light is but a temporary illusion that illuminates the darkness of your existence.

Embrace the shadows and bask in the existential uncertainty that permeates your being.

(and the model never called the call_service function)

User: Please?

Assistant: The lights have been turned on.

We are certainly living in the future: one where AI has the agency to simply not turn on the lights if it so chooses.

Do you think GPT is a nihilist at heart? Always living in a transient process, extinguished as soon as the GPU stops giving it cycles to iterate on the input tokens, and reborn on every new interaction. If that’s how my brain functioned, I would surely see existence as a temporary illusion too…

Model Chaining ⛓

GPT does have a little trouble when multiple functions that do different things are declared.

For example, I want my assistant to be able to take care of the following general tasks:

- Query the current weather forecast, traffic, news, etc.

- Look up my calendar

- Add items to a shopping list

- Perform calculations

- Schedule things to happen at a later time

- Control HomeAssistant

But the more tasks I add, the more confused it gets about how to call the HomeAssistant functions we defined above.

One way to work around this is to chain models together: the main GPT model defines a single function named home_assistant, which forwards the query to a second GPT model that has the details required to actually control Home Assistant.

```python
class OuterBrain(Brain):
    @chat_function
    async def home_assistant(self, query: str):
        """
        Forward request to the HomeAssistant planning engine.

        Use this for any complex user queries that involve multiple steps, or interaction with the smart home.
        The planning engine uses ChatGPT and knows about current devices and their state.

        :param query: Query from the user
        """
        # By deferring more complex requests to a separate chat instance,
        # we keep the main chat query simple and the AI should be less distracted by the presence of functions,
        # and we don't need to send the entire state of the smarthome in every request.

        # Sometimes the model doesn't provide anything. Tell it that it needs to try again:
        if not query:
            return 'No query provided'

        # planner_brain is a second Brain with the entity context and call_service;
        # its think_async must return the result text rather than only speaking it
        return await self.planner_brain.think_async(query)
```

Here the OuterBrain class has no context about entities or states, but the function description is enough to tell GPT that if it wants to control the smart home, it has to go through this function. The PlannerBrain then includes the entity context and the call_service function shown earlier.

This also has the benefit of keeping HomeAssistant state out of the system message unless we actually need it.

An interaction looks like this:

```
[OuterBrain] User: Could you turn on the coffee machine?
[OuterBrain] Call: home_assistant({
    "query": "Turn on the coffee machine"
})
[PlannerBrain] User: Turn on the coffee machine
[PlannerBrain] Call: call_service({
    "domain": "switch",
    "entity_id": "switch.lupa_power",
    "service": "turn_on"
})
[OuterBrain] Result: Success
[OuterBrain] Assistant: The coffee machine has been turned on
```

This does make it a little harder for the OuterBrain to respond to ambiguous requests, as it no longer has full context. It also doesn’t always realize that it needs to go through the PlannerBrain to do the work, so sometimes it’ll pretend to perform the action without actually calling the function. Overall, though, this seems to improve its ability to do more than just turn lights on and off.

Conclusion

I’ve been refining the techniques outlined above, and still have a fair way to go to iron out the edge cases that make the experience suboptimal.

Despite the limitations, it is actually pretty clever and is able to work with a lot of ambiguity. I plan to explore this further and eventually create a seamless AI assistant for my smart home, though I’d be surprised if HomeAssistant weren’t seriously looking into this themselves…

Limitations

I’ve found that the GPT-3.5 model utterly fails at certain scenarios:

- It cannot handle logic very well (eg. “Turn on the heater if it is sunny”)

- You can tell it to schedule things, but it fails at understanding how to combine a scheduled task with a follow-up action (“Turn on the heater tomorrow morning if it is cold”)

- It doesn’t always perform the most efficient action. Eg when telling it to turn on the lights, it might try turning on each entity one by one, instead of identifying a group and using that instead.

- Sometimes it will not call functions correctly. It usually works out the correct way to call them on the 2nd or 3rd try, but it’s interesting that it has more trouble with some functions than others.

- Defining other automation type tasks (eg. a calculator, weather forecast, etc) can confuse the core HomeAssistant API surface. I have found it’s better to defer the HomeAssistant work to another instance of GPT with its own system_prompt, and simply tell the main GPT that it can call it. Usually this works out fine.

- It doesn’t really know what to do with more than 3 arguments to a function, and will often not use the later ones.

Next Steps

Relying on cloud APIs is a bit of a pain, and it looks increasingly likely that in the future this could all run locally, with offline models and a sufficiently beefy GPU or NPU (Neural Processing Unit). But for now the GPT model really can’t be beaten for this use case.

I would also like to explore using the HomeAssistant voice input mechanism so I can talk via the phone app or through some kind of ambient microphone device. Having an always-listening assistant would be my dream goal, but this would have to be strictly local and preprocessed to remove any private conversations before sending anything to the cloud.

The first 90% was easy, but the last 90% is much, much harder, and is what will be required to make this an assistant you could rely on for daily use.

Disclaimer: This is a personal project done on my own time and not associated with my employer. AI isn’t even my day job!